Today’s scientific data must be accessible now and in the future to maximise its value to society. Funding providers increasingly require grant recipients to have a data management plan to ensure that funded research outputs are findable, accessible, interoperable, and reusable (the FAIR principles), and that the research is reproducible. To support this need for long-term preservation, specialised repositories have developed, taking some of the burden of curation from PIs and providing a long-term home for the data. Over its 30-year history, GEOFON, hosted in Section 2.4, has become well known as a safe archive for seismic waveform data from over 230 different seismic networks. This month, this work was recognised by CoreTrustSeal’s award of “Trusted Repository” status.

Obtaining this status required a detailed assessment to ensure that sound procedures are in place for all aspects of data curation: ingestion, acceptance, and preservation. Independent CoreTrustSeal reviewers evaluated data management practices, as well as administrative and technical aspects of data centre operations. Certification helps assure the community that geophysical data will be valued and will remain available in future, so that results can be reproduced, new uses can be explored, and long-term studies can be supported.

In practice, the GEOFON team assists PIs with preparing their datasets for archiving, carefully creating descriptive metadata to ensure a rich “landing page” for each archived network and to provide all the context information, such as instrument types, that is needed for future scientific exploration. Data can be retrieved in bulk through web services or explored interactively.

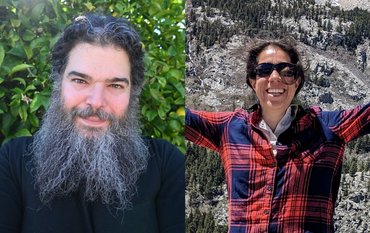

GEOFON data curator Susanne Hemmleb has long been a familiar point of contact for many PIs archiving at GEOFON. She said, “We have data from about 16,000 stations around the world, and keeping this priceless set of observations available for our partners is very important to us.” Doing so requires considerable storage and backup capacity, as well as continued good network access. “Preserving and managing access to that growing archive, currently ~200 TB, is a huge task, which we can only succeed in through collaboration with GFZ’s Data & Information Management (Section 5.1) and IT Services and Operations (Section 5.3) units, the Helmholtz Open Science team, and the Karlsruhe Institute of Technology’s Scientific Computing Center. We are grateful to the many contributors who have worked with us to archive their data thus far,” she added.

Professor Frederik Tilmann, speaker for GFZ’s Modular Earth Science Infrastructure (MESI), explained that trusted data repositories are an essential resource for modern geophysical research, including for driving data mining and artificial intelligence approaches. “Importantly, specialised data centres such as the GEOFON data centre are the backbone for federated multi-disciplinary e-infrastructures such as EPOS, the European Plate Observing System, which allow scientists to seamlessly retrieve different types of data from multiple sources. Furthermore, the new infrastructures we are building, such as the establishment of sub-sea monitoring with telecommunication cables in project SAFAtor, critically depend on the enhancements to metadata management and delivery being built at GFZ,” he said.

Drawing also on EU projects that seek to widen access, especially Geo-INQUIRE, the GEOFON team is constantly improving its services by adding new instrument types, integrating AI applications, cloud computing and related services, and contributing to the global harmonisation of standards. Not to mention working towards recertification in 2028!

Related link: “2028-12-03 - GEOFON - CoreTrustSeal Requirements 2023-2025.” DataverseNL. https://doi.org/10.34894/BIEFEB

Related link: “GFZ Seismological Data Archive: Networks archived at GEOFON.” GEOFON. https://geofon.gfz.de/waveform/archive/

![Susanne Hemmleb (right) and her GEOFON colleague Peter Evans (both Section 2.4) hold the CoreTrus](/fileadmin/_processed_/0/a/csm_cert0677_CoreTrustSeal_Geofon-02_7297240a18.jpeg)
